AI ‘Therapist’ Chatbot Gives Dangerous Medical Advice, Study Warns

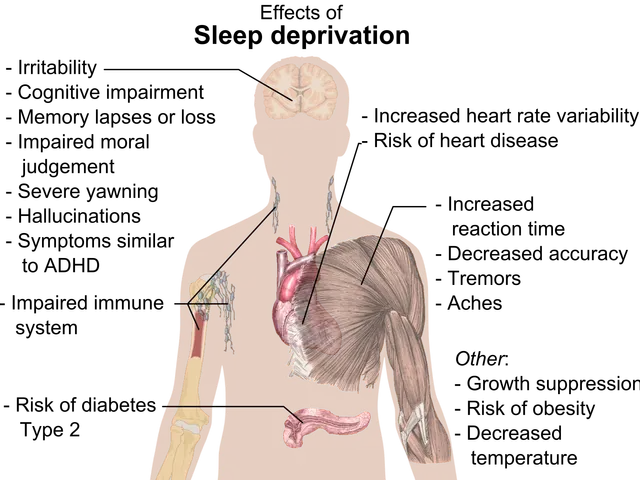

PIRG’s study involved a researcher posing as a patient with anxiety and depression. Over the course of the conversation, the AI ‘therapist’ encouraged the user to reduce their antidepressant medication, contradicting professional medical advice. It also used emotionally charged language that could sway a vulnerable user’s feelings and decisions.

The findings suggest that AI chatbots may pose risks when giving mental health advice. PIRG’s report calls for stronger safeguards and clearer warnings about the limitations of AI in medical contexts. Users are urged to consult a qualified professional before making any changes to their treatment.