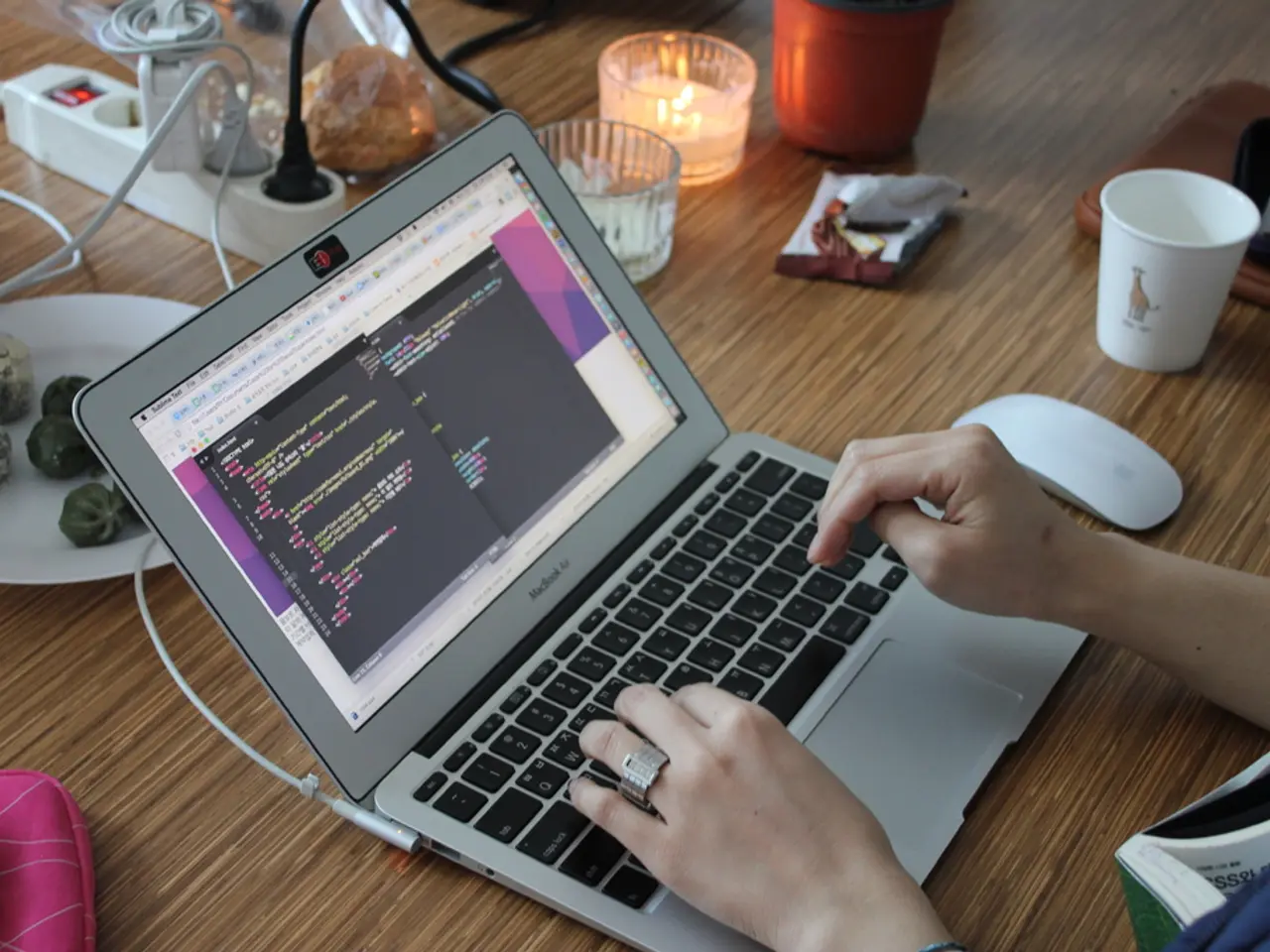

Algorithmic Impact Assessment: User Guide

A research partnership between the organization behind this website and the NHS AI Lab has produced a user guide for teams that want to use National Medical Imaging Platform (NMIP) imaging data to conduct research, train new medical products, or test existing ones. The guide, available on the website's project page, sets out a step-by-step process for completing an Algorithmic Impact Assessment (AIA) and forms a key part of the partnership's exploration of AIAs in healthcare.

As described in the guide, the AIA is a risk assessment and mitigation process designed to ensure that ethical, legal, operational, and patient safety considerations are addressed before the NHS AI Lab team grants access to the NMIP dataset. The key steps are:

- Risk Identification and Domain Coverage: The process begins by identifying potential risks of applying AI algorithms to imaging data, covering patient safety, operational efficiency, legal compliance, technological robustness, human capital, and strategic and financial factors.

- Stakeholder Engagement and Expert Input: The people involved in the AIA (including radiologists, data scientists, cybersecurity experts, and ethicists) work together to identify and analyze risks from multiple perspectives, so that every domain is covered and the proposed mitigations are practical.

- Enterprise Risk Management Framework Adaptation: The AIA applies an Enterprise Risk Management (ERM) framework adapted for healthcare, assessing emerging risks across eight domains and identifying mitigation strategies before AI is integrated into medical imaging workflows.

- Operational Mitigation Actions: The assessment produces mitigation actions to minimize risks, such as ensuring clear communication channels with patients, supporting radiologists' decision-making, and monitoring AI algorithm performance over time.

- Documentation and Compliance: The AIA requires detailed documentation of risks and mitigation steps, in line with NHS AI Lab guidance on responsible AI use and with the data governance and ethical standards for accessing imaging data through the NMIP.

- Iterative Review: Ongoing monitoring and periodic reassessment of risks as AI algorithms and their clinical contexts evolve are also key parts of the process, helping to ensure lasting safety and effectiveness.
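The risk identification, documentation, and review steps above can be pictured as a simple risk register. The sketch below is a hypothetical illustration, not part of the NHS AI Lab guide: the domain labels are taken loosely from the risk areas named in the first step (the guide's own eight-domain taxonomy may differ), and the 1 to 5 likelihood and impact scale, the scoring rule, and the 180-day review interval are all assumptions.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical domain labels based on the risk areas listed above;
# the guide's own eight-domain ERM taxonomy may use different names.
DOMAINS = {
    "patient_safety", "operational", "legal", "technological",
    "human_capital", "strategic", "financial",
}


@dataclass
class Risk:
    description: str
    domain: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    mitigations: list[str] = field(default_factory=list)
    owner: str = ""  # e.g. a radiologist, data scientist, or ethicist

    def score(self) -> int:
        # Likelihood x impact: a common ERM rating convention (an assumption here).
        return self.likelihood * self.impact


@dataclass
class RiskRegister:
    risks: list[Risk] = field(default_factory=list)
    last_review: date = field(default_factory=date.today)
    review_interval_days: int = 180  # hypothetical reassessment cadence

    def add(self, risk: Risk) -> None:
        # Reject entries that would make the documentation inconsistent.
        if risk.domain not in DOMAINS:
            raise ValueError(f"unknown domain: {risk.domain}")
        if not (1 <= risk.likelihood <= 5 and 1 <= risk.impact <= 5):
            raise ValueError("likelihood and impact must be between 1 and 5")
        self.risks.append(risk)

    def unmitigated(self) -> list[Risk]:
        # Risks that still have no documented mitigation.
        return [r for r in self.risks if not r.mitigations]

    def uncovered_domains(self) -> set[str]:
        # Domains for which no risk has been recorded yet.
        return DOMAINS - {r.domain for r in self.risks}

    def review_due(self, today: date) -> bool:
        # True once the reassessment interval has elapsed since the last review.
        return today >= self.last_review + timedelta(days=self.review_interval_days)


if __name__ == "__main__":
    register = RiskRegister(last_review=date(2024, 1, 1))
    register.add(Risk("Model misses small nodules on low-dose CT", "patient_safety", 3, 5,
                      ["Radiologist reviews every AI-negative scan"], "radiologist"))
    register.add(Risk("Performance drifts after a scanner upgrade", "technological", 3, 4))
    print([r.description for r in register.unmitigated()])
    print(sorted(register.uncovered_domains()))
    print(register.review_due(date(2024, 7, 1)))
```

Run as a script, the example lists the drift risk as unmitigated, shows which domains have no recorded risks yet, and reports that a review is due. Keeping gaps explicit in this way mirrors the guide's emphasis on domain coverage and periodic reassessment, though a real AIA record would follow the guide's own template.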

The user guide also includes a template for conducting an AIA, to help teams that are seeking access to NMIP imaging data for specific healthcare purposes. Completing the AIA as outlined in the guide is a prerequisite for access to the NMIP dataset.
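Because completing the AIA is a prerequisite for access, a team might check its draft for gaps before submitting it. The sketch below is hypothetical: the section names loosely follow the steps described earlier and are not the official template's headings, and passing this check only means the draft is complete, since the access decision itself rests with the NHS AI Lab team.

```python
from dataclasses import dataclass, field

# Hypothetical section names; the official NMIP AIA template defines its own.
REQUIRED_SECTIONS = (
    "purpose",
    "risks_identified",
    "stakeholders_consulted",
    "mitigations",
    "monitoring_plan",
)


@dataclass
class AIASubmission:
    team: str
    sections: dict[str, str] = field(default_factory=dict)

    def missing_sections(self) -> list[str]:
        # A section counts as missing if it is absent or contains only whitespace.
        return [s for s in REQUIRED_SECTIONS if not self.sections.get(s, "").strip()]


def ready_for_access_request(submission: AIASubmission) -> bool:
    # Only checks completeness; it does not grant access to any data.
    return not submission.missing_sections()


if __name__ == "__main__":
    draft = AIASubmission("Chest X-ray triage team", {
        "purpose": "Test an existing triage model on NMIP images",
        "risks_identified": "Missed findings; dataset shift",
        "mitigations": "   ",
    })
    print(draft.missing_sections())
    print(ready_for_access_request(draft))
```

For this draft, the check flags the stakeholder, mitigation, and monitoring sections as incomplete, so the draft is not yet ready to submit.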

This guide is part of a larger research partnership exploring AIAs in healthcare, and the project page provides information about the wider work in this area. The full report on the AIA process is also available from the project page.

In conclusion, the AIA for NMIP imaging data access requires multidisciplinary risk identification, use of a healthcare-adapted enterprise risk management model, extensive stakeholder collaboration, and thorough risk mitigation and documentation aligned with NHS AI Lab guidance to ensure safe, legal, and effective AI deployment in medical imaging.

- The AIA process described in the user guide applies a structured risk assessment and mitigation approach to the use of AI in healthcare, addressing ethical, legal, operational, and patient safety considerations.

- By completing the AIA, teams using the National Medical Imaging Platform (NMIP) dataset for research, for training new medical products, or for testing existing ones can help ensure that their work follows NHS AI Lab guidelines while minimizing risks and maintaining patient safety.