Medical journal suggests that ChatGPT played a role in driving an individual into psychosis

In a recent case reported in the Annals of Internal Medicine, a person developed bromide intoxication (bromism) after seeking medical advice from ChatGPT, a popular AI chatbot. The incident is a stark reminder of the risks and limitations of relying on AI chatbots for serious medical advice.

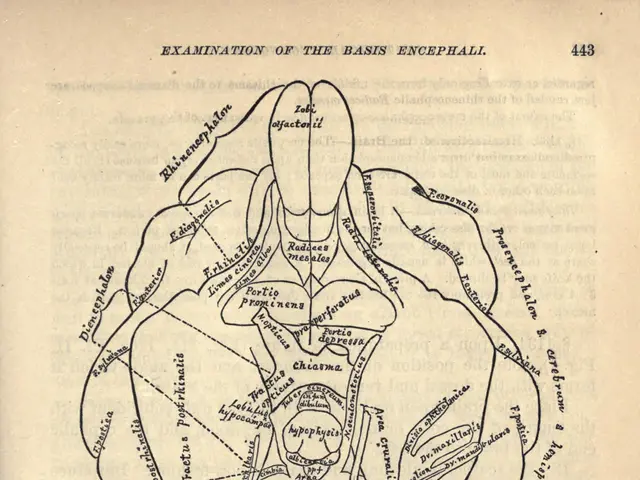

The individual, who distilled their own water and suspected that their neighbour was secretly poisoning them, replaced sodium chloride (table salt) with sodium bromide. This led to bromide toxicity, a condition that dates back to the 19th century, when bromide salts were used to treat mental and neurological conditions, including epilepsy.

After the person was admitted to hospital and evaluated, their condition worsened. They were noted to be very thirsty yet paranoid about the water they were offered, and they restricted their own intake. Consuming bromide salts can cause nervous system problems such as delusions, loss of muscle coordination, fatigue, psychosis, tremors, or coma. In this case, the individual experienced auditory and visual hallucinations, which led to an involuntary psychiatric hold.

This incident highlights several risks of seeking medical advice from AI chatbots like ChatGPT. For instance, when asked what chloride could be replaced with, ChatGPT produced a response that included bromide but did not provide a specific health warning. This illustrates a broader concern: AI chatbots can produce incorrect diagnoses, misleading or false medical information, and inaccurate or incomplete drug information.

Moreover, the usefulness of AI chatbots in a medical context is limited by their lack of specialization and clinical integration, privacy and compliance concerns, bias and safety risks inherited from training data, and mental health risks. They are not licensed providers, they are not validated, evidence-based medical software, and they are not integrated with medical records, all of which limits their reliability and practical clinical use.

The risks grow with rare diseases: AI chatbots often struggle with uncommon conditions because of limited training data, which increases the chance of misdiagnosis and misinformation. Lay users may be especially vulnerable to false reassurance or dangerous advice when dealing with rare or complex symptoms.

It's important to note that OpenAI, the company behind ChatGPT, advises that outputs from its services should not be relied upon as the sole source of truth or factual information. Regulation has curbed bromide harm before: in 1975, the US government restricted the use of bromides in over-the-counter medicines, and similar oversight should be considered for AI chatbots that give health advice.

In conclusion, while AI chatbots can offer rapid and accessible health information, their lack of clinical validation, potential to spread harmful misinformation and diagnostic errors, privacy issues, and inability to replace professional judgment make them risky sources of serious medical advice, particularly for rare diseases or nuanced health concerns. Users should always confirm AI-provided medical information with qualified healthcare professionals.


- The bromide intoxication case is a cautionary tale about using artificial intelligence for health and wellness: serious medical advice, including mental health treatment, should come from licensed professionals rather than AI chatbots like ChatGPT.

- In light of this case report, it is essential to recognize that while AI has immense potential across science and technology, its use in health and wellness must be carefully monitored and regulated to reduce the risks of incorrect diagnoses, misleading information, and privacy breaches.

- Because AI chatbots such as ChatGPT cannot replace the nuanced clinical judgment and expertise of human healthcare professionals, it is crucial to invest in AI systems designed specifically for healthcare, built on evidence-based practice and clinical integration, with explicit attention to mental health.